Google recently released Guetzli, an algorithm that is said to make JPEGs up to 35% smaller with fewer artifacts. As the creator of an open source image hosting platform, I wanted to test its performance in the real world.

Note: Google says the ideal way to use Guetzli is on uncompressed and really large images. On image hosting sites, users usually upload only small(er) images, so the results will probably not reach 35%, but let's find out.

Let's convert all images on PictShare

PictShare uses a smart query system: by changing the URL of an image, you can have it automatically resized, filtered, etc. This means PictShare creates a copy for each modified image. I will include all commands I used so other PictShare admins can test it for themselves.

# Get all JPEGs and save the list in a file

cd /path/to/your/pictshare/upload;

find . -mindepth 2 | grep '\.jpg$' > jpglist.txt

Let's just see how many images we have and how large they are now

cat jpglist.txt | wc -l #10569

du -ch `cat jpglist.txt` | tail -1 | cut -f 1 # 3.7G

So we have 10569 JPEG images with a total size of 3.7G
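`du -ch` rounds its total, and the backtick expansion breaks on paths that contain spaces. If you want an exact byte count, a minimal sketch like the following should do. The function name `total_bytes` is made up for this example; it only assumes that `jpglist.txt` holds one path per line.

```shell
#!/usr/bin/env bash
# total_bytes LIST: sum the sizes (in bytes) of all files named in LIST.
# "total_bytes" is a hypothetical helper, not part of PictShare or Guetzli.
total_bytes() {
    local list="$1" path size total=0
    while IFS= read -r path; do
        # Skip entries that no longer exist instead of failing
        [ -f "$path" ] || continue
        # wc -c on redirected input prints only the byte count
        size=$(wc -c < "$path")
        total=$((total + size))
    done < "$list"
    printf '%s\n' "$total"
}
```

Usage: `total_bytes jpglist.txt`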

Let's convert them and see what happens

mkdir /tmp/guetzli

tmp="/tmp/guetzli"

# Convert every image in the list; the output keeps the original file name

while IFS= read -r path

do

b=$(basename "$path")

guetzli "$path" "$tmp/$b"

done < jpglist.txt

What they don't tell you: Guetzli is slow

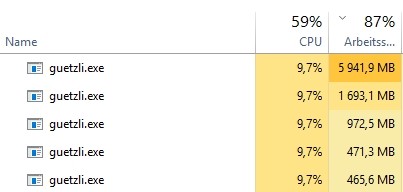

For now, Guetzli only uses one CPU thread and is very slow. Even small images with 800x600 pixels take about 10-20 seconds on an i7 5930K @ 4 GHz. It also uses up to 6 gigabytes of RAM per image.

I split the conversion script into 9 parts (so the system still has some threads left) and let my spare server run the conversion exclusively.
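Splitting the list by hand is one way to do it. As an alternative sketch (not the script used for this test), `xargs -P` can keep a fixed number of Guetzli processes running at once. `convert_parallel` and the `CONVERTER` variable are hypothetical names for this example, and `-0`/`-P` are GNU/BSD extensions to `xargs`. With up to 6 GB of RAM per image, pick a job count your memory can handle.

```shell
#!/usr/bin/env bash
# CONVERTER is a hypothetical override so the function can be dry-run, e.g. with "cp".
CONVERTER="${CONVERTER:-guetzli}"

# convert_parallel LIST OUTDIR [JOBS]
# Reads one path per line from LIST and runs up to JOBS converters at the same time.
convert_parallel() {
    local list="$1" outdir="$2" jobs="${3:-9}"
    mkdir -p "$outdir"
    # Newlines become NUL bytes so paths with spaces survive xargs
    tr '\n' '\0' < "$list" |
        xargs -0 -P "$jobs" -I{} \
            sh -c '"$1" "$3" "$2/$(basename "$3")"' _ "$CONVERTER" "$outdir" {}
}
```

Usage: `convert_parallel jpglist.txt /tmp/guetzli 9`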

The Results

Converting 10569 images with Guetzli took 50 hours on my Xeon server with two physical CPUs and 16 threads.

With the following script I tested whether all images were valid. Interestingly, 72 of the Guetzli images (less than 1 percent) were not. Most of them were either not created at all or had 0 bytes because Guetzli crashed. I re-ran all missing images and it crashed again... odd.

orig_failed=0

converted_failed=0

while read path

do

orig="toconvert/$path"

out="converted/$path"

if [[ ! $(file -b "$orig") =~ JPEG ]]; then

orig_failed=$((orig_failed + 1))

echo "[$orig_failed] $orig is not a valid JPEG"

fi

if [[ ! $(file -b "$out") =~ JPEG ]]; then

converted_failed=$((converted_failed + 1))

echo "[$converted_failed] $out is not a valid JPEG"

fi

done < jpglist.txt

echo ""

echo "Results:"

echo "$orig_failed of the original images are not valid JPEG images"

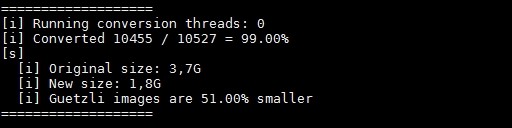

echo "$converted_failed of the converted images are not valid JPEG images"- The original 10569 images had a size of 3.7G (3915306882 Bytes). Average 361KiB per image

- The Guetzli images have a size of 1.8G (1908475014 bytes), an average of 176.34 KiB per image

In total, the Guetzli images were a whopping 51% smaller
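The averages and the 51% figure follow directly from the byte totals above. A small sketch to reproduce the arithmetic; `average_kib` and `savings_percent` are names invented for this example, and `awk` is only used here as a floating point calculator.

```shell
#!/usr/bin/env bash
# average_kib TOTAL_BYTES COUNT: mean file size in KiB, two decimals
average_kib() {
    awk -v t="$1" -v n="$2" 'BEGIN { printf "%.2f\n", t / n / 1024 }'
}

# savings_percent ORIG_BYTES NEW_BYTES: how much smaller NEW is, in percent
savings_percent() {
    awk -v o="$1" -v c="$2" 'BEGIN { printf "%.1f\n", 100 - c / o * 100 }'
}

average_kib 3915306882 10569           # prints 361.77
average_kib 1908475014 10569           # prints 176.34
savings_percent 3915306882 1908475014  # prints 51.3
```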

Examples

Some images were even more impressive than that.

This was not an isolated case. I used the following script to analyze the images and the results were amazing.

In the end, 18% (1940 of 10569) of the converted images were 60% (or more) smaller than the original files. I'm beginning to think this might have something to do with the PHP GD library, which creates the JPEGs on PictShare.

# Note you'll need "identify" first.

# Install with: apt-get install imagemagick

# Empty the CSV once, before the loop, so rows are not wiped on every iteration

: > conversionlist.csv

while IFS= read -r path

do

orig="toconvert/$path"

conv="converted/$path"

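if [ -f "$orig" ] && [ -f "$conv" ]; then

# du reports disk usage in blocks, not exact bytes, which is close enough for a percentage

orig_size=$( du "$orig" | cut -f1 )

conv_size=$( du "$conv" | cut -f1 )

percent=$( echo "scale=2;100 - (($conv_size / $orig_size) * 100)" | bc -l )

# Drop the decimals so the value can be used in an integer comparison

percent=${percent%.*}

dimensions=$( identify -format '%wx%h' "$conv" )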

if [[ $percent -ge 60 ]]; then # only print if the new image is at least 60% smaller than the original

printf '%s is %s%% smaller\t%s instead of %s\t Dimensions: %s\n' "$path" "$percent" "$conv_size" "$orig_size" "$dimensions"

echo "$path;$percent;$dimensions" >> conversionlist.csv

fi

fi

done < jpglist.txt

Conclusion

Since 72 images could not be converted, the totals carry an error margin of a bit under 1%.
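To see exactly which files are behind that margin, the missing and 0 byte outputs can be collected into a retry list. This is a minimal sketch that assumes the `converted/` layout from the validation script above; `list_failed` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# list_failed LIST CONVDIR
# Prints every path from LIST whose converted copy in CONVDIR is missing or empty.
list_failed() {
    local list="$1" convdir="$2" path
    while IFS= read -r path; do
        # -s is only true for files that exist and are larger than 0 bytes
        [ -s "$convdir/$path" ] || printf '%s\n' "$path"
    done < "$list"
}
```

Usage: `list_failed jpglist.txt converted > retry.txt`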

The computation time is massive, but if it is done in the background it might not be a problem. I have no idea why Guetzli works better than Google promised even though most images were not high quality images, but the results speak for themselves.

If you are an image hoster and you have spare CPU cycles (lots of them), Guetzli can save you good money on storage, and it seems that if your images were created with PHP GD they will get much smaller.
